Hey. If you’re here then you probably already use Obsidian or LM Studio, are interested in AI note taking, or like open source software. If not, know that Obsidian is a nice note taking app that saves your notes in Markdown format as .md files. LM Studio is a nice app that lets you load and run different Large Language Models locally. IDK if LLMs make people more efficient at note taking but they generate text faster than I can write. Also, it’s a good title.

Install Obsidian. I’ll be using a Mac, but you can also download it for Windows from https://obsidian.md/.

I like to install things with brew if they are not available via App Store. If you have no idea what brew is then there is a short intro in my setting up your data science environment article.

Using Obsidian

Go through the installation unless it’s already installed. I don’t pay for any kind of subscription to save my Vault. The files are saved in my OneDrive folder. It doesn’t matter where you save it, but it’s nice to have it backed up and accessible on several devices.

If this is your first time then maybe play around with note taking before trying out AI note taking.

Click the Settings cog when you’re ready.

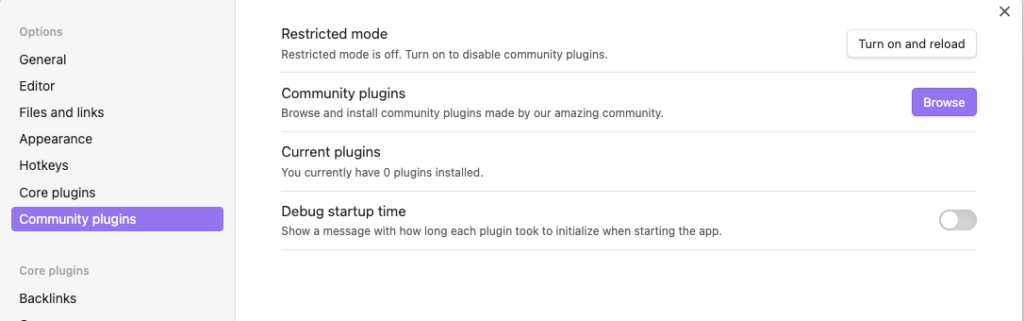

Enable Community plugins.

Get BMO Chatbot and Enable it.

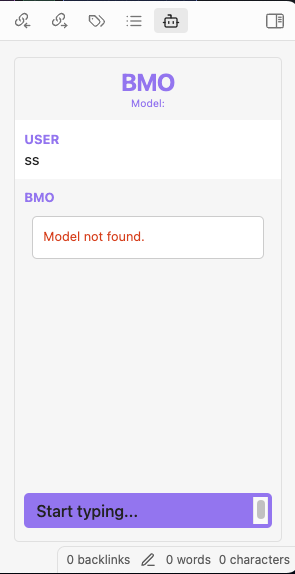

Now you see a new android icon on the sidebar.

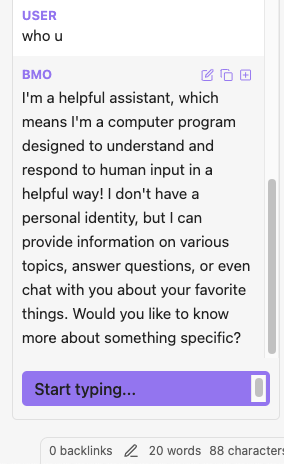

When you click it you’ll see the chat thingy.

It won’t work. Shit.

Setting up the Large Language Model

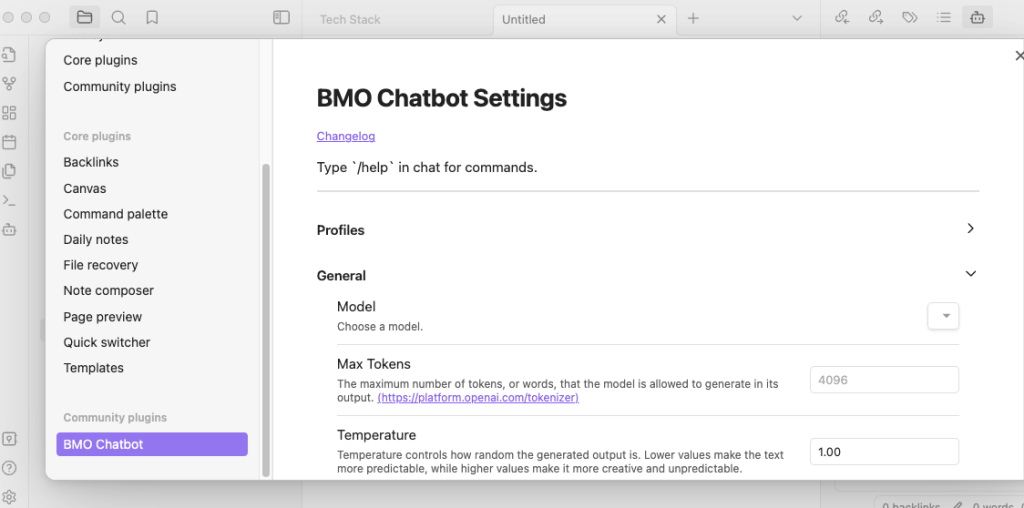

Go back to Settings. There is a new option called BMO Chatbot.

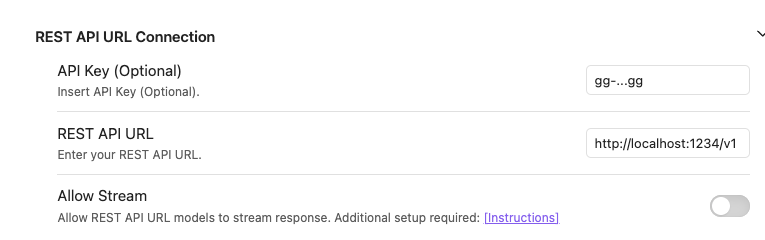

In Advanced you’ll see the REST API URL field.

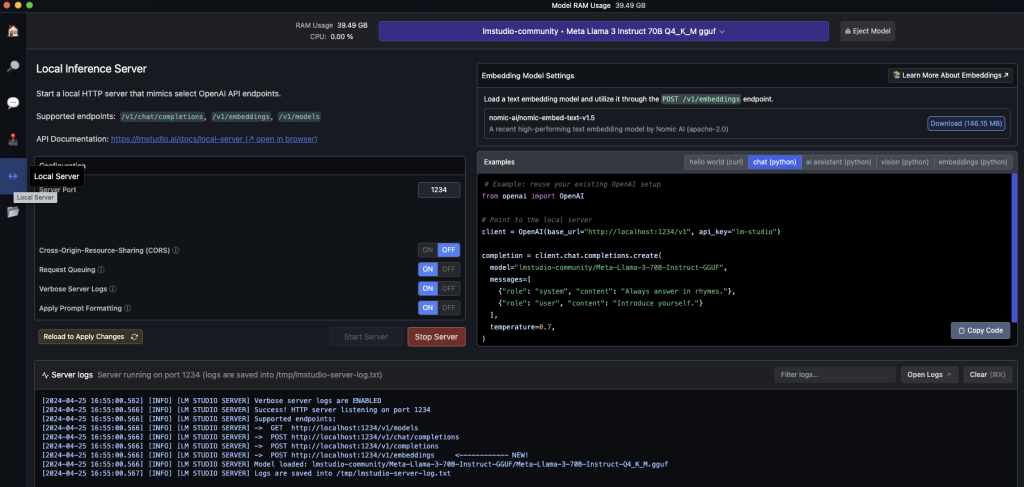

Open LM Studio. You can learn how to install and use local models from this article. It’s quick and you can get up and running in minutes. The steps are basically: install LM Studio and pick a model.

In LM Studio click the local server icon, pick a model and start the server. I’m using a quantized version of Llama 70b. You can pick whatever fits your RAM. There is an okay model leaderboard here: https://chat.lmsys.org/

Some of the small models that work well include:

- Phi 3

- Llama 3 8b

- Mistral 7b

- Gemma 2b

The models are ever-changing and you can try different ones. Anyway, now you’re ready to paste http://localhost:1234/v1/ into the REST API URL field.

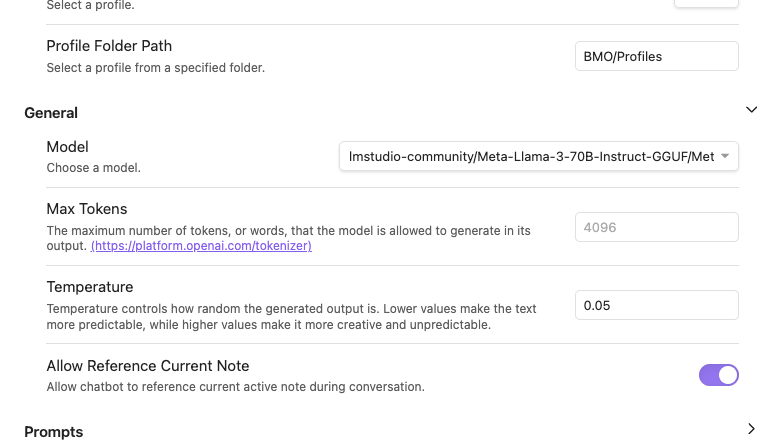
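If you want to check that the server is actually up before blaming the plugin, here is a minimal Python sketch. It assumes LM Studio's local server is running on the default port from this post and answers the OpenAI-style `/models` endpoint. The helper names (`models_url`, `parse_model_ids`, `list_models`) are mine, not part of any library.

```python
import json
import urllib.request

# The same URL that goes into the REST API URL field.
BASE_URL = "http://localhost:1234/v1/"


def models_url(base_url: str) -> str:
    """Build the models endpoint URL without doubling the slash."""
    return base_url.rstrip("/") + "/models"


def parse_model_ids(payload: dict) -> list:
    """Pull model ids out of an OpenAI-style {"data": [{"id": ...}]} reply."""
    return [model["id"] for model in payload.get("data", [])]


def list_models(base_url: str = BASE_URL) -> list:
    """Ask the local server which models it is serving."""
    with urllib.request.urlopen(models_url(base_url), timeout=5) as resp:
        return parse_model_ids(json.load(resp))


if __name__ == "__main__":
    try:
        print(list_models())
    except OSError as err:  # connection refused, timeout, etc.
        print(f"Server not reachable, did you start it? ({err})")
```

If this prints a list of model ids, the server side is fine and whatever is left to fix is in the plugin settings.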

It won’t work yet. You need to scroll up and pick the model. You can also change other settings. I prefer a low temperature and I let it reference my current note.
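To get a feel for what those two settings mean, here is a rough Python sketch of a request with a low temperature and a note passed along as context. It assumes the server speaks the OpenAI-style chat completions format at the URL from this post. The system prompt wording, the `0.2` default, and the `your-model-id` placeholder are my own illustrations. I don't know the exact prompt the plugin builds.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1/"


def build_chat_request(model, note_text, question, temperature=0.2):
    """Build an OpenAI-style chat body that carries the note as context."""
    return {
        "model": model,
        # Low temperature: less random, more repeatable answers.
        "temperature": temperature,
        "messages": [
            # Hypothetical system prompt, not the plugin's real one.
            {"role": "system", "content": "Answer using this note:\n\n" + note_text},
            {"role": "user", "content": question},
        ],
    }


def ask(body, base_url=BASE_URL):
    """POST the body to the chat completions endpoint and return the reply text."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # "your-model-id" is a placeholder; use the model you loaded in LM Studio.
    body = build_chat_request("your-model-id", "Obsidian saves notes as .md files.", "Summarise this note.")
    print(ask(body))
```

Bigger models can take a while to answer, hence the generous timeout.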

AI note taking

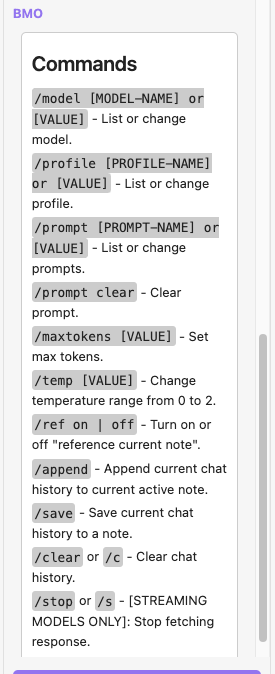

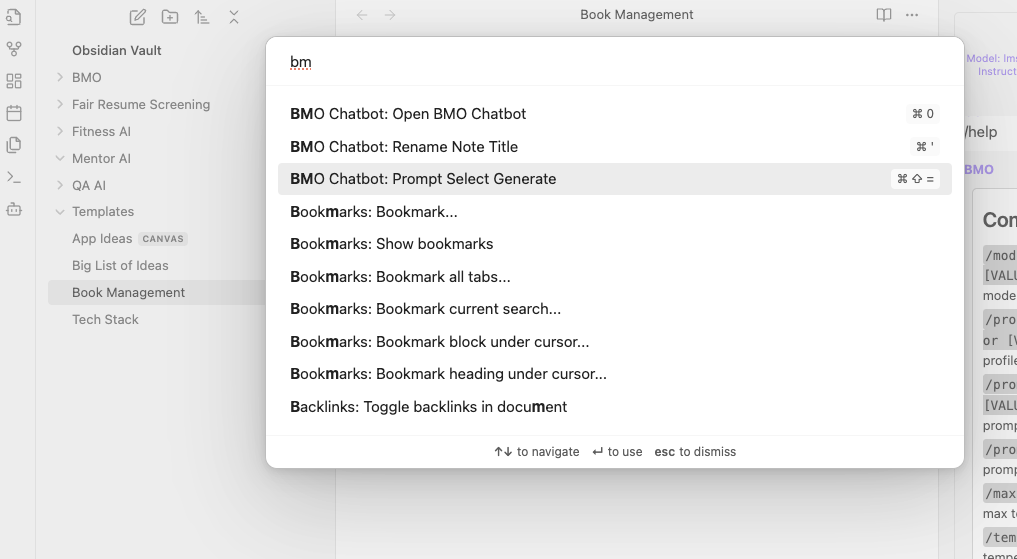

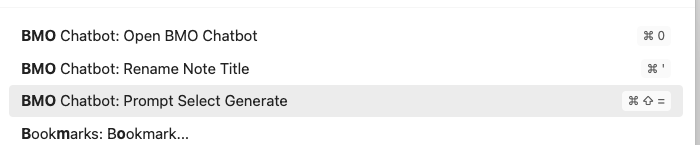

These are some handy shortcuts you can find in the command palette.

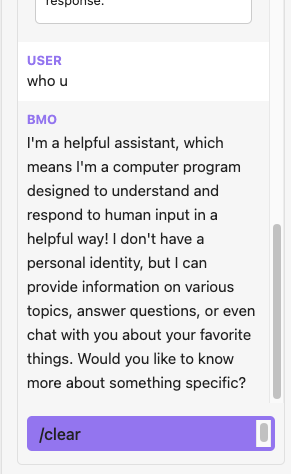

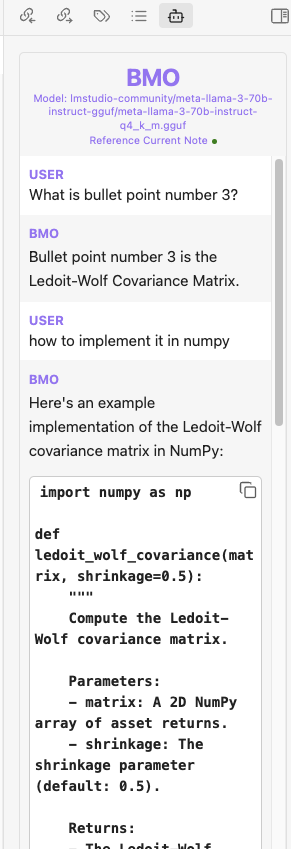

Now you can chat with it.

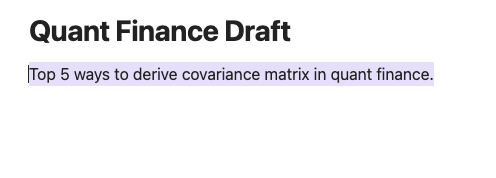

Well, chat is nice, but using it inside your notes is cooler.

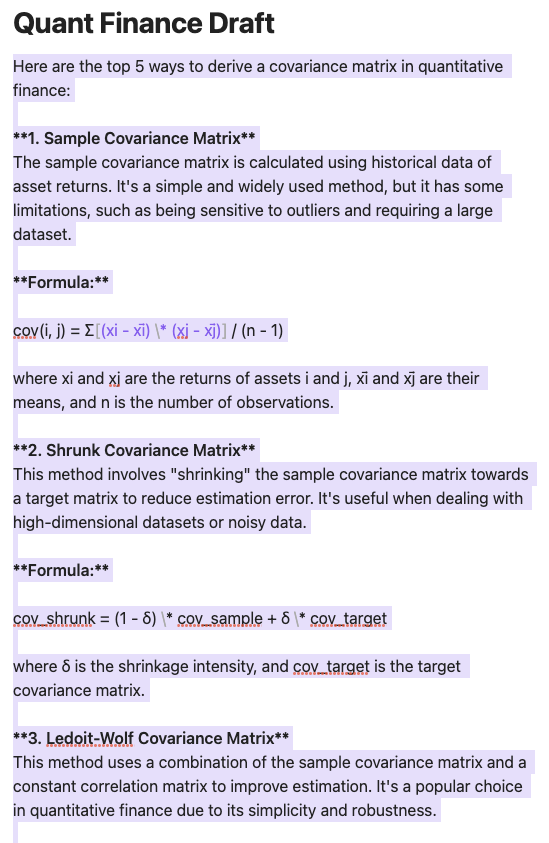

You can select text within a note and hit Command + Shift + = to use the highlighted text as a prompt.

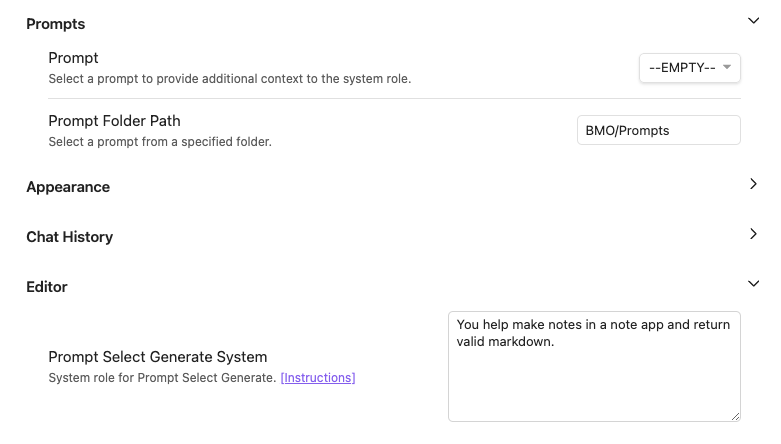

If you don’t want to use the shortcut, search the command palette for Prompt Select Generate.

Now we wait.

It did a good job. In chat you can ask questions about the current note that is open. Use /clear to clear your nonsense inputs.

I asked about the note it generated. To be honest it’s much nicer if you have domain knowledge already. This is because you know when the LLM is wrong.

You might want to use /clear often if you’ve asked many questions. When I asked it to ‘create a summary of the note’ it did not create a good summary, and it referenced things from the chat along with the note.
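My guess at why this happens: chat apps usually send the whole conversation along with every new question, so old questions leak into the answer. The Python sketch below shows that pattern. It is not the plugin's code, and the `ChatSession` class is made up for illustration.

```python
class ChatSession:
    """Toy chat session that resends its history with every question."""

    def __init__(self, note_text):
        self.note_text = note_text
        self.history = []  # earlier questions and answers

    def messages_for(self, question):
        """Everything the model would see for this question."""
        system = {"role": "system", "content": "Current note:\n\n" + self.note_text}
        return [system, *self.history, {"role": "user", "content": question}]

    def record(self, question, answer):
        """Remember one finished exchange."""
        self.history.append({"role": "user", "content": question})
        self.history.append({"role": "assistant", "content": answer})

    def clear(self):
        """Roughly what I assume /clear does: forget the earlier exchanges."""
        self.history.clear()


if __name__ == "__main__":
    session = ChatSession("Obsidian saves notes as .md files.")
    session.record("what is brew?", "A package manager for macOS.")
    print(len(session.messages_for("create a summary of the note")))  # note + old chat + question
    session.clear()
    print(len(session.messages_for("create a summary of the note")))  # note + question only
```

After clearing, the model only sees the note and the new question, which is what you want for a clean summary.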

When you’re ready, you can go back to the settings and play with the prompt to improve it further.

Further reading

- You can fine-tune domain-specific models if you need this for school.

- There are other commands like /save which might be nice.