Yew,

LM Studio provides an easy-to-use GUI for trying out and testing models, and its built-in search lets you discover new models without leaving the tool. It lets you download GGUF models and run them locally. These models are usually available in quantized form, which requires a lot less memory. Quantization simply means the weights are stored at lower precision. In real-world use, quantized models aren't noticeably worse until you go to really low precision (4-bit, for example).
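To make "lower precision" concrete, here is a minimal sketch of symmetric absmax quantization in plain Python. It is a simplified illustration, not the exact scheme GGUF files use (those quantize weights in small blocks, each block with its own scale). The function names and sample weights are hypothetical. Each weight is rounded to a small signed integer that shares one float scale, and the sketch measures how much precision is lost at 8 and 4 bits.

```python
def quantize_absmax(weights, bits=8):
    """Map floats to signed integers of `bits` bits using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Each weight becomes a small integer; only `scale` stays a float.
    return [round(w / scale) for w in weights], scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


def max_error(weights, bits):
    """Largest absolute difference between original and round-tripped weights."""
    q, scale = quantize_absmax(weights, bits)
    return max(abs(w - r) for w, r in zip(weights, dequantize(q, scale)))


weights = [0.12, -0.5, 0.33, 0.9, -0.07]  # hypothetical sample weights
for bits in (8, 4):
    print(f"{bits}-bit max error: {max_error(weights, bits):.4f}")
```

On these sample values the 4-bit round trip is off by well over ten times as much as the 8-bit one. Real quantizers work per block precisely to keep that error small.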

If you don’t have Homebrew or a data science environment set up, follow this guide:

Alternatively, you can follow a simpler, older version of that guide for Windows or Mac here.

Installing LM Studio

On a Mac with Homebrew, install the cask (`brew install --cask lm-studio`):

https://formulae.brew.sh/cask/lm-studio

Alternatively, you can download and install it on Windows or Mac from here: https://lmstudio.ai/

Once it’s installed, open it.

A lot of models have high RAM requirements. One that should work for everyone is Phi-2, a model Microsoft open-sourced (and made free for commercial use at the start of 2024).

Using a model

Search for Phi

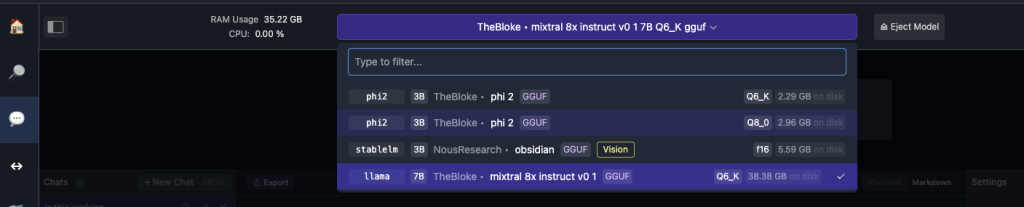

TheBloke is a community member who regularly releases high-quality GGUF models at several quantization levels.

Pick one whose memory requirements suit you. The biggest one needs 2.96 GB, which is still not very much.
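Those file sizes follow from simple arithmetic: parameter count times bits per weight, divided by 8 to get bytes. The sketch below assumes Phi-2's published count of roughly 2.7 billion parameters and llama.cpp's Q8_0 and Q4_0 layouts as I understand them (blocks of 32 weights sharing one 16-bit scale). The helper names are hypothetical. It ignores file metadata and the extra RAM needed at runtime for the context, so treat the results as rough lower bounds.

```python
def bits_per_weight(weight_bits, block_size=32, scale_bits=16):
    """Effective bits per weight when each block of weights shares one scale."""
    return weight_bits + scale_bits / block_size


def estimate_size_gb(n_params, bpw):
    """Approximate model size in gigabytes (10**9 bytes)."""
    return n_params * bpw / 8 / 1e9


PHI2_PARAMS = 2.7e9  # assumption: Phi-2's published ~2.7B parameter count

for label, bpw in [
    ("16-bit float", 16.0),
    ("8-bit blocks (Q8_0-style)", bits_per_weight(8)),
    ("4-bit blocks (Q4_0-style)", bits_per_weight(4)),
]:
    print(f"{label}: ~{estimate_size_gb(PHI2_PARAMS, bpw):.2f} GB")
```

The 8-bit estimate lands around 2.87 GB, in the same ballpark as the 2.96 figure above, and the 4-bit variant needs roughly half of that.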

Once it has downloaded, go to the Chat area and load the model using the selector at the top.

Talk to it and have fun.

Running a local server

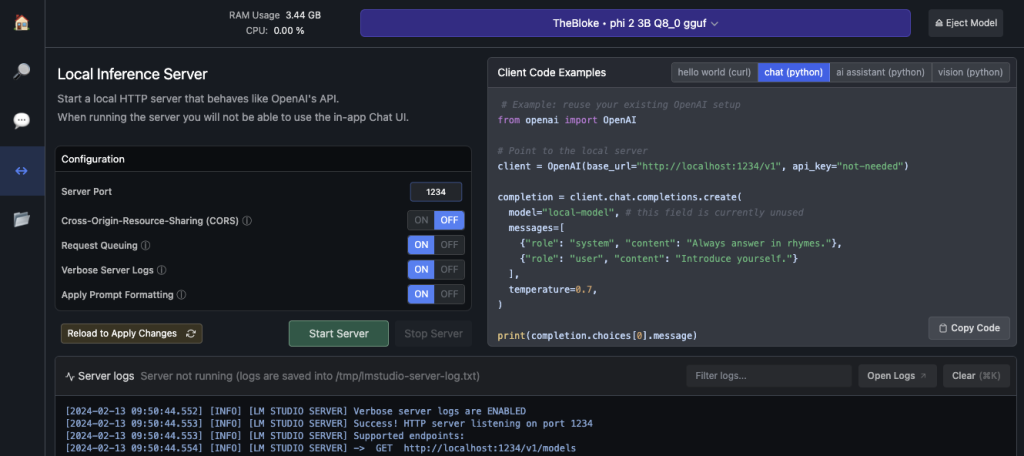

If you want to access the model from a script, you can start a local server.
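The local server speaks the same HTTP API as OpenAI's chat completions endpoint, so even the Python standard library is enough to talk to it. This is a minimal sketch, assuming the server runs on LM Studio's default address, http://localhost:1234/v1 (adjust `BASE_URL` if you changed the port). The "local-model" name is a placeholder, on the assumption that with a single model loaded the server uses that model regardless of this field. `build_chat_payload` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: LM Studio's server is running on its default port 1234.
BASE_URL = "http://localhost:1234/v1"


def build_chat_payload(prompt, temperature=0.7):
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": "local-model",  # placeholder; the loaded model answers
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def chat(prompt):
    """POST the prompt to the local server and return the reply text."""
    data = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # The reply follows the OpenAI response shape.
    return body["choices"][0]["message"]["content"]
```

With the server started, `print(chat("Explain GGUF in one sentence."))` should print the model's reply.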

You can then use it from Python to do cool things in your scripts. As long as the model uses the ChatML format, it also works with OpenAI’s Python package, which is a bonus.
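With OpenAI's Python package, the only change is pointing the client at the local server through `base_url`. This sketch assumes `pip install openai` (the v1.x `OpenAI` client) and the server on LM Studio's default http://localhost:1234/v1. The local server doesn't need a real API key, but the client won't start without one, so a placeholder string is passed. `make_client`, `build_messages` and `ask` are hypothetical helper names, and "local-model" is a placeholder model name.

```python
def make_client(base_url="http://localhost:1234/v1"):
    """Create an OpenAI client that talks to LM Studio instead of OpenAI."""
    from openai import OpenAI  # requires: pip install openai (v1.x)

    # Placeholder key: the local server ignores it, but the client requires one.
    return OpenAI(base_url=base_url, api_key="lm-studio")


def build_messages(prompt, history=None):
    """Append the new user turn to an optional list of earlier messages."""
    messages = list(history or [])  # copy so the caller's list is untouched
    messages.append({"role": "user", "content": prompt})
    return messages


def ask(client, prompt, history=None):
    """Send one chat turn and return the assistant's reply text."""
    completion = client.chat.completions.create(
        model="local-model",  # placeholder; the loaded model answers
        messages=build_messages(prompt, history),
        temperature=0.7,
    )
    return completion.choices[0].message.content
```

Usage: `client = make_client()` followed by `print(ask(client, "Write a haiku about quantization."))`. Passing earlier messages as `history` lets you keep a conversation going across calls.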

Bye.
